
Supreme Court Justices raise First Amendment concerns in NetChoice oral argument

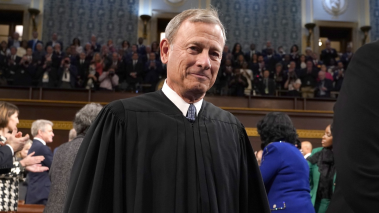

Chief Justice John Roberts, shown here at the 2023 State of the Union Address, cut to the heart of the First Amendment questions at stake in the NetChoice hearings before the Supreme Court today.

Today, the Supreme Court of the United States heard oral arguments in two consequential cases concerning the First Amendment rights of social media platforms: NetChoice v. Paxton and Moody v. NetChoice.

Each of the cases concerns a state law, one in Texas (Paxton) and one in Florida (Moody). The Court’s resolution of each promises to clarify what, if any, role the government may play in regulating how social media platforms curate, remove, and disseminate content.

Recognizing the insidious implications of allowing the government to meddle in the editorial decisions of private publishers, FIRE has spoken out in defense of the platforms and against government interference. And today, some of the Justices asked tough questions of the lawyers representing Texas and Florida that highlight what’s at stake for free speech in these cases.

Chief Justice John Roberts, for example, reminded Texas Solicitor General Aaron Nielson that the First Amendment is meant to curtail government censorship, not the moderation decisions of private companies. “The First Amendment restricts what the government can do,” he said. “What the government is doing here is saying, ‘You must do this. You must carry these people. You’ve got to explain if you don’t.’ That’s not the First Amendment.”

The Chief Justice then asked Nielson a few probing questions that cut to the heart of the matter:

Who do you want to leave the judgment about who can speak or who can’t speak on these platforms? Do you want to leave it with the government — with the state? Or do you want to leave it with the platforms — the various platforms? The First Amendment has a thumb on the scale when that question is asked.

Justice Brett Kavanaugh elucidated another aspect of this argument in the Florida hearing. “In your opening remarks, you said the design of the First Amendment is to prevent the suppression of speech,” he said to Florida Solicitor General Henry C. Whitaker. “And you left out what I understand to be three key words to describe the First Amendment: By the government.”

Later in the hearing, he elaborated on this point:

When the government censors, when the government excludes speech from the public square, that is obviously a violation of the First Amendment. When a private individual, or private entity, makes decisions about what to include and what to exclude, that’s protected, generally, editorial discretion, even though you could view the private entity’s decision to exclude something as, quote, “private censorship.”

In an amicus curiae (“friend of the court”) brief filed with the Court, FIRE voiced similar concerns. We argued that it’s unconstitutional for Florida and Texas to prohibit large social media platforms from moderating content based on their own standards. “The specific features of these two schemes don’t matter that much,” our brief states. “The point is, both impose state supervision over content moderation for private speech forums.” Allowing states to intervene ignores that the First Amendment was designed to protect against government power. Paul Clement, counsel for NetChoice, warned that if the laws are upheld, social media platforms would be required to permit speech that violates their rules. That requirement, he cautioned, would discourage users and advertisers from visiting and could force the sites to shut down access to their services in those states altogether.

For similar reasons, FIRE also filed an amicus brief in another case the Court will hear this term, Murthy v. Missouri, which concerns government “jawboning,” that is, pressuring private social media platforms to suppress and deplatform disfavored views. In both NetChoice cases and in Murthy, we’re urging the Court to keep the government’s hands out of online content moderation.

“No one has a ‘right’ to have their words printed and distributed by their preferred publisher,” our NetChoice brief argues. Likewise, as our Murthy brief puts it, “No matter how concerning it may be when private decisionmakers employ opaque or unwise moderation policies, allowing government actors to surreptitiously exercise control is far worse.”

Whether through heavy-handed laws or behind-the-scenes coercion, government overreach into the content moderation decisions of private entities violates the First Amendment. And it’s wrong regardless of whether it appears to benefit the political left or right.

As the public conversation surrounding these cases heats up and the Court weighs the arguments, we hope it keeps these fundamental First Amendment principles top of mind.
